"Remember that all models are wrong; the practical question is how wrong do they have to be to not be useful." —Box & Draper 1987

The man kept on talking and talking, telling me about the cat that was brushing up against his right thigh at that very moment. Eventually, I asked the man what color the trees were outside the window of his hospital room and he tilted his head slightly and described shades of deepest green. The man, a patient I had been asked to see, was comfortable and alert. He knew where he was. He could speak and understand without difficulty. His memory—for both remote events and recall of the last months that led to him being hospitalized for an operation on his failing mitral valve a week earlier—was intact. And yet, looking out his window at the southern slope of Mount Royal, covered in decidedly non-green late-October peak foliage, it wasn’t difficult to tell that something was wrong.

I had been asked to see him because the nursing staff felt he had been acting unusually in the days following his heart surgery. His primary nurse described him as a kidder—a jovial man who wasn’t much trouble. She couldn’t recall him using the call bell. But the joking belied some unusual behavior—he seemed not to know what to do with items on his meal tray and he was continually making excuses for not being able to open juice containers that came along with his meal. He didn’t complain about his difficulties. The nurse told me she helped him with the containers and felt that he had the look of someone who’d forgotten their glasses. He wasn’t getting out of bed and when he did, he moved tentatively. An occupational therapist told me she was unable to do a kitchen assessment with him because he seemed apathetic.

I examined the patient and found that he couldn’t detect my fingers moving 18 inches in front of his face. His pupils were equal and reactive. The man smiled at me throughout the entire examination, seemingly unconcerned, explaining that his inability to answer my questions about what he could see was due to the poor lighting in the brightly fluorescent-lit room. I finished examining him, and I asked him again about the color of the trees.

He was blind. But what was more unusual was that he didn’t seem to know, or at least was unable to acknowledge he was blind.

Imaging confirmed that he’d suffered a stroke that affected both occipital lobes and part of the right parietal lobe. He had Anton’s Syndrome—damage to the occipital lobes that leaves a person cortically blind (their eyes work, but the part of their brain that processes visual information is damaged) and the more curious finding of anosognosia—a denial of illness. All of this, in Anton’s Syndrome anyway, occurs in the absence of other neurological problems such as amnesia or generalized confusion or specific language problems.

The original descriptions of the syndrome also emphasized another aspect to the already strange presentation—patients with Anton’s Syndrome confabulate: in the face of obvious and severe disability (at least to an outside observer), patients with Anton’s Syndrome construct odd, obviously false and often detailed narratives that often seem intended to explain away their situation. My patient’s confident and incorrect response about the color of the leaves raised the eyebrows of the sister who came to visit him as we spoke, and the cat whom the patient reported rubbing against his leg disappeared when an adjacent pillow was moved away from him.

The questions raised by Anton’s Syndrome are among the most interesting in neurology—how can someone not be aware of losing something as vital as sight? How can a person’s narrative, the running explanation of what they are experiencing, be so deranged as to not only lose the ability to describe their situation, but to take on an alternate narrative, to create something fictional in the place of a missing autobiographical narrative?

There are varying responses to the oddness of deficits presented in Anton’s syndrome. Probably the earliest recorded description of the syndrome, and the most typical response, comes from Seneca in his Moral Letters to Lucilius where he describes what has happened to Harpaste, his wife’s former nursemaid: “Now this clown suddenly became blind. The story sounds incredible, but I assure you that it is true: she does not know that she is blind. She keeps asking her attendant to change her quarters; she says that the apartments are too dark.”

“Why do we deceive ourselves?” Seneca muses to Lucilius after this, and we are left to think that he has concluded that the woman’s problem is obstinate denial in the face of what any reasonable, self-aware person would regard as catastrophe. Intuitively, it’s hard to disagree. (Although the notion of a Stoic philosopher being upset that someone has insufficiently acknowledged their own personal catastrophe has its own irony.)

Many people, family and other caregivers, will consider patients with Anton’s Syndrome as simply delirious (they aren’t) or, like Seneca, dismiss them as dim and duplicitous, deceiving themselves as well as others. Even medical professionals have difficulty coming to terms with the concept of anosognosia, a term that Joseph Babinski coined in 1914, after Anton’s descriptions, to denote a lack of awareness of one’s deficit. The medical students who spend a couple of weeks on the neurology service are electrified by the oddity of someone who is unaware that they are blind, but their interest often stops there. These patients are reduced to just another exhibit in the emporium of incredibly odd cases that cluster in neurology. But other trainees, such as neurology residents, who are working towards a deeper understanding of the nervous system, are often genuinely troubled by Anton’s Syndrome. They have seen how other patients who experience visual loss, even minimal distortions, report these in great detail and without delay. They’ve committed themselves to studying the nervous system and internalized the (admittedly grandiose but still essentially correct) notion that the brain is the most complex and miraculous physical entity in the universe. For these specialists-in-training, to have a patient who lacks awareness that they are blind could indicate a person who is in denial, or simply lying, and neither prospect is particularly interesting for a neurologist. Another alternative, even more troubling to the trainees I supervise, is that it could mean that the system on which their patient’s consciousness was based was rather more rickety and corruptible than one would expect from the most miraculous entity in the universe.

Or it could be that the way we’ve been taught the nervous system works is simply wrong.

A traditional view of nervous system function, influenced by the writings of Plato and Descartes and buttressed by the Behaviorist theories that dominated the field by the mid-20th century, held that sensory information arrived passively, akin to impressions falling on our sense organs. The impulses generated by our sense organs were then transmitted to the brain where there was a ‘making sense’ of the data, based on how it matched up with previous experience or similarity to other prototypes, and this constituted perception. Based on these perceptions, emotions and actions were then selected and movements initiated by motor commands. This is referred to as a ‘bottom-up’ model, and using it to explain cognitive function made a lot of sense with the way the brain and the nervous system were coming to be understood. One of the earliest and best described functions of the nervous system is the stretch reflex, an elegant, two-neuron wiring diagram that shows information flowing into the nervous system through sensory nerves to synapse in the spinal cord with a motor neuron that sends information back out to become action. All of this seems to typify a very plausible bottom-up model. The simplicity and utter practicality of the stretch reflex in helping to make diagnoses left a deep impression on generations of doctors who trained as neurologists. It became tempting to think of the nervous system as a complex system of understandable elements, a scaled-up hierarchy of reflexes that reinforced the validity of a ‘bottom-up’ system.

But as much as the ‘bottom-up’ approach offered, it failed to explain other phenomena neurologists saw in their everyday working lives. The vivid visual hallucinations of the Parkinson’s patient, the migratory sensory symptoms that occur in patients labeled as hysterical, the lack of blindness-awareness in Anton’s syndrome: none of this made sense in a system organized in a bottom-up way. And so, when faced with something we don’t understand, we retreat to a stance like Seneca’s. We dismiss or disparage, reactions that let us disengage from the situation so that we don’t have to admit a larger failure.

A second model does exist, however, with its origins in Aristotelian and, to some degree, in Kantian thought, emphasizing that the brain actively seeks information it predicts may be present in the environment based on a certain intention. The inference (or idea, or prior, or hypothesis) is propagated to a point where certain neurons compare bottom-up information and top-down predictions to form a prediction error. The prediction error signal (the gap between the expected and the delivered, weighted in line with expectation) is what is propagated ‘up’ to inform the next inference.

It is a system that still relies heavily on sensory input, but more as a substrate for error-testing each inference to eventually arrive at a new, updated belief rather than using sensation as an initiating event. In the nineteenth century this model was most effectively conceptualized by Hermann von Helmholtz, the German physician-scientist who proposed that unconscious inference—the process of generating hypotheses informed by experience and continually testing these hypotheses—was the most plausible model for perception, and further elaborated that the entire system was designed to reduce an organism’s surprise while negotiating the challenges of an ever-changing and often hazardous environment. Helmholtz, understanding that any proposed system had to be both hierarchical and flexible to incorporate learning, proposed that multiple representations and action plans needed to be represented for any situation. Helmholtz’s concept of unconscious inference evolved into what is now referred to as Predictive Processing, a model that, by incorporating notions of Bayesian inference—statistical likelihood of a hypothesis—has come to influence thinking not only in cognitive neuroscience, but artificial intelligence, robotics, and philosophy.
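For readers who find arithmetic clarifying, the weighting of a prediction error can be sketched in a few lines of Python. This is a toy illustration under simplifying assumptions, not a model of any real neural circuit: the names `Belief`, `update` and `sensory_precision` are invented here, and beliefs are assumed to be simple Gaussian estimates of a single quantity.

```python
from dataclasses import dataclass


@dataclass
class Belief:
    mean: float       # current best guess about some quantity in the world
    precision: float  # confidence in that guess (inverse variance)


def update(belief: Belief, observation: float, sensory_precision: float) -> Belief:
    """One step of precision-weighted error correction (Gaussian Bayes rule).

    Hypothetical helper for illustration only.
    """
    # The gap between what was delivered and what was expected.
    prediction_error = observation - belief.mean
    # How much the senses are trusted relative to the prior belief.
    gain = sensory_precision / (belief.precision + sensory_precision)
    return Belief(
        mean=belief.mean + gain * prediction_error,
        precision=belief.precision + sensory_precision,
    )


if __name__ == "__main__":
    belief = Belief(mean=0.0, precision=1.0)
    for observation in [1.0, 1.0, 1.0]:
        belief = update(belief, observation, sensory_precision=1.0)
        print(belief)
```

In this sketch, repeated observations pull the belief toward what the senses report and make it more confident. If `sensory_precision` is set to zero, the error signal carries no weight and the belief never moves, however wrong it is.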

Simply put, a top-down model of brain function, with predictive processing as the most robust example of this, sees the brain as a Bayesian inference engine, a relentless creator and tester of hypotheses about the world. Inside us, silently and continuously, multiple ideas about our environment are advanced and tested, assigned probabilities, their error signals weighted with precision, until the explanation (or occasionally, more than one explanation) for experience emerges that best minimizes prediction error. And while at first glance this may seem unnecessarily complicated, it should be remembered that predictive strategies actually maximize the efficiency of information transfer (predictive coding is a basic principle of data compression) by giving feedback that only corrects the errors in what has been predicted.
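The compression point can be made concrete with a small Python sketch. It assumes the simplest possible predictor, one that guesses each value will equal the one before it, so that only the corrections need to be sent. The function names `encode` and `decode` are hypothetical, and real compression schemes are considerably more elaborate.

```python
def encode(signal: list[int]) -> list[int]:
    """Replace each sample with its prediction error.

    The prediction is simply the previous sample (0 before the first one).
    """
    residuals = []
    prediction = 0
    for sample in signal:
        residuals.append(sample - prediction)  # send only the correction
        prediction = sample                    # next guess: "same as last time"
    return residuals


def decode(residuals: list[int]) -> list[int]:
    """Rebuild the original signal by adding each error to the running prediction."""
    signal = []
    prediction = 0
    for error in residuals:
        sample = prediction + error
        signal.append(sample)
        prediction = sample
    return signal


if __name__ == "__main__":
    readings = [100, 101, 101, 102, 104]
    print(encode(readings))
    print(decode(encode(readings)) == readings)
```

When the signal changes slowly, the corrections are small numbers that take less room to store or transmit than the raw values, and nothing is lost because the receiver runs the same predictor.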

Predictive processing casts a new light on phenomena that were difficult to understand using a ‘bottom-up’ conception of cognitive function. The shapes that ‘appear’ in optical illusions and the pervasiveness of the placebo effect are examples of phenomena heavily dependent on prior expectations. Delusions, strongly held but demonstrably false beliefs, can be understood under the predictive processing model as errors in inference reinforced by altered error-signaling (a coarse analogy of this altered error signaling would be the example of search engine confirmation bias, sending the conspiracy theorist back more frequently to spurious ‘evidence’ that affirms their initial, unfounded suspicion).

In a predictive processing paradigm, the possibility of being unaware of a loss of previously held awareness becomes not only tenable, but intuitively understandable. Every process, according to the model, whether it is a thought, a perception, or an action, begins with an inference. An inference that is never made (because the part of the brain that generates that inference is damaged) can never be subjected to the rigors of inference-testing. The untested hypothesis is never confirmed, never refuted. It simply does not exist, akin to an argument that is never made.

Anton himself (and I like to think he was troubled by what he saw as he tried to come to grips with the way in which anosognosia demanded new ways of thinking about perception) alluded coincidentally to a loss of inference in his original description of one of the patients he saw with visual anosognosia: “The patient was unaware of this loss of vision. The defect did not incite reflection or conclusions, nor sorrow, nor loss of pleasure.”

According to a predictive processing model, the patient with Anton’s Syndrome I saw years ago, who could not see fingers moving in front of his face and was unable to understand what this meant, could have lost the ability to make inferences about the state of his vision. He had an inference-of-vision-impairment, an impairment of the inferences he could make about his own vision, along with being visually impaired. Without this inference, ‘I am blind,’ the process of discovery regarding blindness stops, because it never really starts. Some have argued that patients with Anton’s Syndrome may be having hallucinations, but visual hallucinations in the blind (called Charles Bonnet syndrome) are well-formed and detailed rather than the approximate guesses of Anton’s Syndrome, and those with Charles Bonnet syndrome are well aware of their blindness and that their hallucinations are not ‘real.’

The visual demands of the world persist, however, for the vision-impaired and those with inference-of-vision-impairment alike, whether it is trying to negotiate the obstacles in the room or answering a neurologist about the color of the leaves on the trees or making sense of other experiences, and the patient with Anton’s Syndrome is left to make inferences based on what information they have—if you are going to offer a guess about the color of leaves on a tree, if there are any, then green is the right answer 90% of the time. The classic description of the narrative produced by a patient with Anton’s Syndrome is referred to as confabulation—a narrative characterized by its personal nature and its obvious falsity. (The other narrative in Anton’s syndrome—the absence of a personal narrative about blindness—is actually the more glaring example of a dys-narrative state, but is forgotten in the excesses of confabulation.)

Perhaps a more inclusive definition of confabulation would be the personal narrative that continues when information necessary for autobiographical veracity is corrupted (whether it be unavailable, distorted or imprecise) due to memory or perceptual deficits. The narrative continues with all the sense of agency, of authorship, maintained. This is my story and I’m sticking to it: even when the falsity of the narrative is pointed out by outsiders, the confabulator typically maintains its truth. What is left is narrative with authenticity shorn of objective veracity, which to an outside observer represents a shift from autobiography into inadvertent fictionalization.

In her essay, “The White Album,” right after dropping perhaps her most aphoristic of lines about telling stories in order to live, Joan Didion produced a succinct description of the cognitive process of writing that echoes the concept of a predictive processing model:

“We interpret what we see, select the most workable of the multiple choices. We live entirely, especially if we are writers, by the imposition of a narrative line upon disparate images, by the ‘ideas’ with which we have learned to freeze the shifting phantasmagoria which is our actual experience.”

We interpret, we select, we live entirely by imposition—the words that Didion uses could hardly be more synonymous with the making of inference, and they capture what we know intuitively about artistic creation in general and narrative in particular. What is narrative if not a series of inferences, presented linguistically, subjected to the same hierarchical, parallel, continually updated testing process as other cognitive acts?

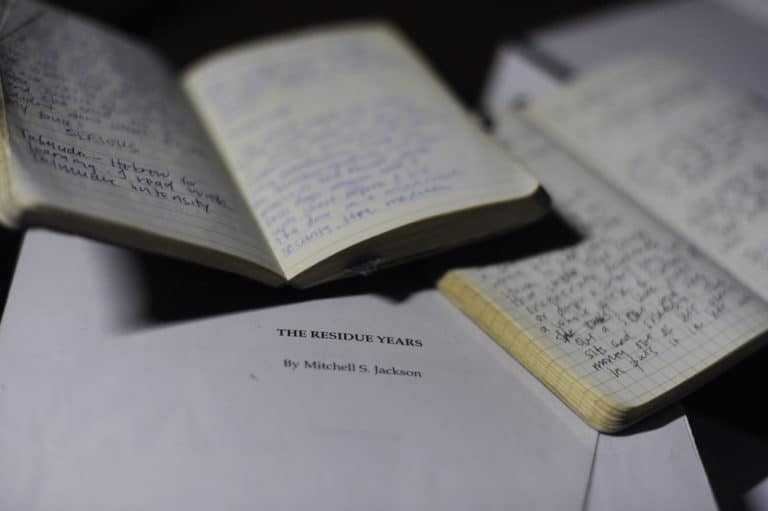

I first read about predictive processing over ten years ago as I was in the midst of editing my first novel. A manuscript sat in front of me—my editor’s initial edits—and my heart sank a little as I noted how the blue pencil markings on the page seemed to camouflage the native typescript. It seemed that every choice I had made in a manuscript that I considered to be already pretty damn good had been subjected to a strict analysis and been found lacking in some way. I remembered reading that VS Naipaul submitted his books not expecting, nor accepting, editorial suggestions. While I may have my delusions, being Naipaul is not one of them, and I started on page one of revisions.

But reading about predictive processing at the same time I was revising a novel was more than a lucky coincidence—it was a source of solace during the process of editing. The thought of a brain constantly making educated guesses about the world was a satisfying melding of concept and act. Predictive processing made me reflect in a way I would not have otherwise done on the process of writing, and I remembered Didion’s words, the exhausting privilege of the act of writing, as I recalled how some parts of the novel had come into being so effortlessly, seeming to appear on the page almost fully-formed whereas other sections seemed wrought from a different, more difficult, place, a process seemingly accompanied by the constant clang of a blacksmith’s hammer.

It made clear to me that my choices as a writer—from the larger thematic choices, to the decisions about the creation of theoretical personalities called characters or choices about tone, to the temporal arrangements of these theoretical events to heighten tension and even particular instances of word choice—all amounted to inferences that I had felt were most likely to create outcomes that gave me (and I hoped others) pleasure. In that sense, the pages of revisions weren’t challenges to my authority but error signals, the bottom-up notifications that signaled a gap in plausibility or coherence or outright good taste between my initial ideas and those of my editor.

Any writer’s unspoken task during the editing process is to understand how to ‘weigh’ the editorial suggestions—in predictive processing terminology how to determine what precision to place on the error signal—and how to better inform the next inference. Many suggestions are accepted, gratefully, as the writer recognizes a misstep avoided, but there are others that require negotiation, a justification and often a thorough reworking—all iterative processes in themselves that make the writer aware again of the array of possible solutions, the multiple representations that exist for any situation that will out of necessity collapse down to the one chosen representation that exists on the finished page.

Predictive processing is a model that purports to explain not only perception but action as well (think about the movement of your arm as a series of predictions about its position, eventually fulfilled by the motor act). As such, predictive processing holds promise as being a potentially unifying theory of brain function, closing the chasm between the cognitive processes that govern ‘everyday’ perceptual and motor acts and what are sometimes regarded as the ‘higher order’ acts of imagination or creation.

This notion, that artistic creation may depend not on muses or serendipity or divine sources but instead relies on the same basic mechanisms that allow us to recognize that our ear is itchy and to scratch that itch, offends some as overly reductionist. There is a need by many to think of the creative act as somehow privileged. But we should recognize that we are “reducing” creative processes to a massively parallel, epistemically ravenous, continually updated processor, honed over millennia to impose its ideas on its surroundings for the good of its own survival. This model doesn’t mean that creative acts are not special, but it does require that we recast every cognitive act as an act of creation. Everything is special. In that sense I am less a reductionist than an elevationist.

But on a more mundane level, when it comes to trying to understand cognitive processes, predictive processing was another way of reinforcing that I am an unapologetic materialist: I believe that experience, perception, memory, action, creation all depend on states of brain. I like to think that I didn’t come to this point primarily from a particular philosophic or cultural stance, but out of a pragmatic need, and expectation, to address the problems faced by my patients. Other than attending to the man with Anton’s syndrome, I am part of a medical team called to look after people whose ability to move, or see, or create with language or have consciousness at all is imperiled by material changes in their nervous system. My job is to find a material cause and propose a material solution without delay. And so if I see a person who is suddenly unable to speak, I cannot address it as a cultural or spiritual problem or even a theoretical problem, but must address it in its purest materialist form: as a blocked artery feeding a specific part of the brain that serves language production. I do this because I am aware, from personal experience and the cumulative experience of medical literature that removal of the small blood clot that blocks that artery increases the chance of recovery of function that has been lost.

If this seems smug—like an argument from technological authority—then consider me already-chastened by those times when these efforts fail, and I am faced with having to help my patients, and their families, understand how their altered nervous systems can continue to navigate their worlds. Materialism gets real, real quick.

The offense felt at this approach by non-materialists (many of whom are superb creators of words or images or ideas) is often accompanied by a tone of derision when discussing material explanations of cognition. This results in reductionism of another, somewhat absurd, variety that is more often met in contemporary culture with Presidential handclaps than critique. In “The Givenness of Things,” Marilynne Robinson writes: “If it is reasonable to say that the brain is meat, it is reasonable, on the same grounds, the next time you look into a baby carriage, to compliment the mother on her lovely little piece of meat.”

This is an example of a recurring theme in modern western discourse, especially in relation to science or technology: the denigration of something made possible by the very thing being denigrated. Just as the spectacular success of vaccines in sparing us the ravages of infectious illnesses like polio allows for the very cultural amnesia necessary for the anti-vaccine movement to thrive, the material brain has the dubious honor of having to generate its own slander. There is a necessary affluence and obliviousness that attend any of these attacks, and an “unacknowledged brain privilege” at work with this attack in particular. But we all have our own forms of blindness of which we are more, or less, aware.

The patient I saw with Anton’s Syndrome regained some elements of cognitive function in the months following his stroke. I saw him in follow-up six months after our initial visits. Deemed unlikely to benefit from rehabilitation because of his lack of awareness regarding his deficits, he had been placed in a seniors’ residence. His anosognosia had resolved (many patients with Anton’s Syndrome do experience some improvement along these lines), but he remained severely visually impaired. He now understood he was blind. He seemed less lighthearted, describing his day in the way someone with a new, severe visual impairment would: the constant need for help, the humbling loss of autonomy. The isolation. Now that he was able to properly infer the depth of his loss, the narrative of his illness had changed, reverting from a fictionalized account to an autobiographical one. Eventually he behaved like a blind person to everyone’s satisfaction.

❦❦❦

Anton G. Über die Selbstwahrnehmung der Herderkrankungen des Gehirns durch den Kranken bei Rindenblindheit und Rindentaubheit. Arch Psychiatr Nervenkr. 1899; 32:86–127

Joan Didion, The White Album, Simon & Schuster, 1979

Marilynne Robinson, The Givenness of Things, Farrar, Straus and Giroux, 2015